Racial categories in machine learning

Sebastian Benthall and Bruce D. Haynes. 2019. Racial categories in machine learning. In Proceedings of the Conference on Fairness, Accountability, and Transparency (FAT* '19). Association for Computing Machinery, New York, NY, USA, 289–298. DOI:https://doi.org/10.1145/3287560.3287575

The authors argue that fairness metrics for racial categories in machine learning largely ignore that race is a socially constructed political category. When fairness is formalized into equations, there has been little discussion of how protected class variables are decided and assigned. They highlight a dilemma in design approaches for machine learning: either ignore racial categories, which reifies inequality because differences are no longer measured, or consciously embed race, which reifies the racial categories themselves. They instead argue for a third approach: preceding human-based fairness decisions with unsupervised learning that detects patterns of segregation without anchoring to racial categories. They state that race, as an unstable social and political category, is an unreliable source of the "ground truth" that supervised ML models rely on.

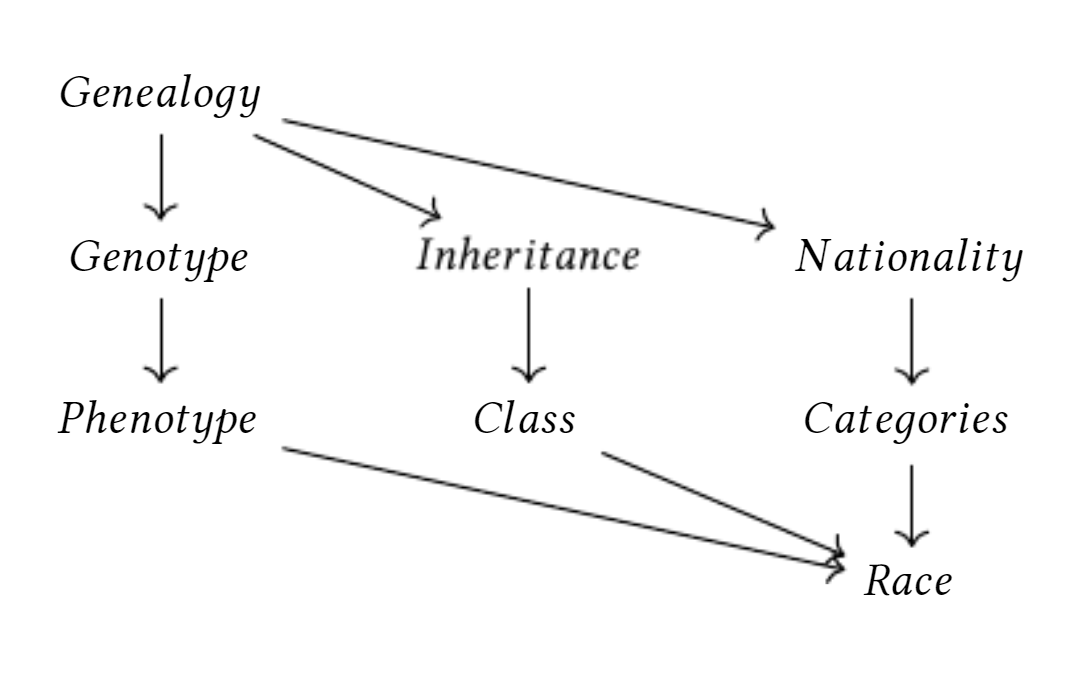

They highlight ways that racial identification is ascribed to the body, for example through biometric properties: phenotypes are associated with specific races even though the relationship between phenotype and racial categories is complex. They offer a diagram of the relationships they identify.

Heuristics for Evaluating Racial Categories

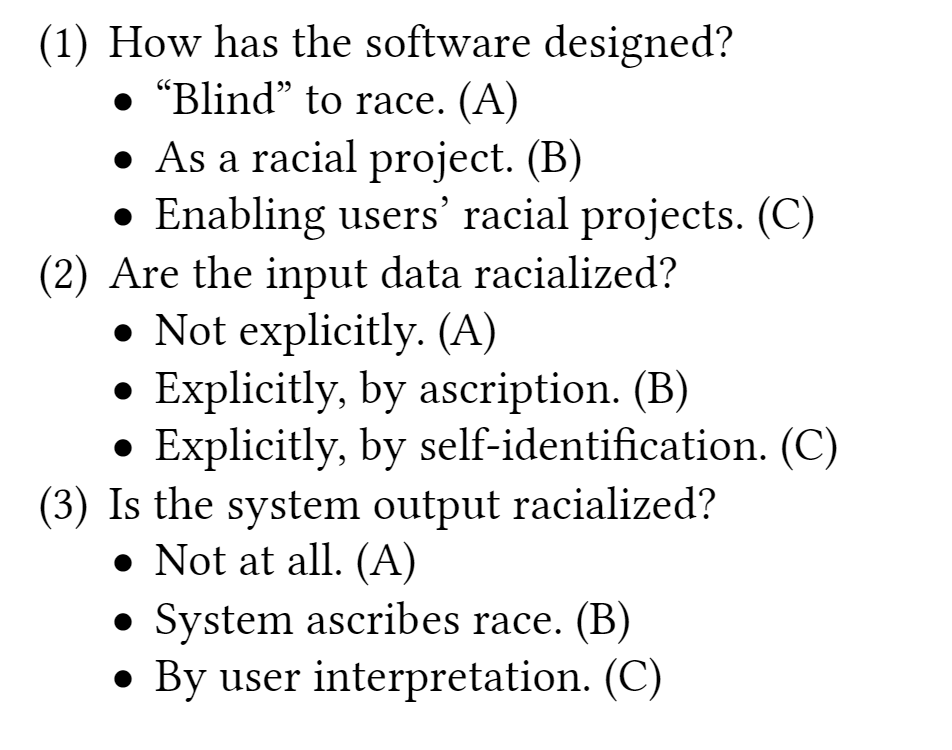

They propose heuristics for evaluating how "racial projects" are mapped onto machine learning, in terms of being racist, anti-racist, or race ignorant. In evaluating a piece of software, they recommend asking how it was designed.

The authors propose inferred race-like categories rather than racial categories. They write that, "adaptive to real patterns of demographic segregation, this proposal aims to address historic racial segregation without reproducing the political construction of racial categories."
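
To make the idea of inferring groups from segregation patterns more concrete, here is a minimal sketch under stated assumptions. The summary above does not specify an algorithm, so spectral bipartition is swapped in here as one simple unsupervised method. The contact graph `EDGES` and the helper names `laplacian`, `fiedler_vector`, and `infer_groups` are hypothetical and chosen only for illustration. The sketch splits a toy network into two groups using nothing but who is connected to whom, with no racial labels as input.

```python
# Illustrative sketch only: a toy graph and a spectral bipartition.
# This is an assumed stand-in technique, not the authors' data or algorithm.

def laplacian(n, edges):
    """Build the dense graph Laplacian L = D - A for an undirected graph."""
    L = [[0.0] * n for _ in range(n)]
    for u, v in edges:
        L[u][v] -= 1.0
        L[v][u] -= 1.0
        L[u][u] += 1.0
        L[v][v] += 1.0
    return L


def fiedler_vector(L, iterations=500):
    """Approximate the eigenvector of L with the second-smallest eigenvalue.

    Uses power iteration on (shift * I - L) while repeatedly removing the
    constant component, which belongs to eigenvalue 0 of L.
    """
    n = len(L)
    # Twice the maximum degree is an upper bound on the eigenvalues of L.
    shift = 2.0 * max(L[i][i] for i in range(n))
    x = [float(i) for i in range(n)]  # deterministic starting vector
    for _ in range(iterations):
        mean = sum(x) / n
        x = [xi - mean for xi in x]  # project out the constant vector
        y = [shift * x[i] - sum(L[i][j] * x[j] for j in range(n))
             for i in range(n)]
        norm = sum(yi * yi for yi in y) ** 0.5
        x = [yi / norm for yi in y]
    return x


def infer_groups(n, edges):
    """Assign each node to one of two groups by the sign of its entry."""
    vec = fiedler_vector(laplacian(n, edges))
    return [0 if value < 0 else 1 for value in vec]


# Hypothetical contact graph: two densely connected clusters of four people,
# joined by a single tie between node 3 and node 4 (a segregated network).
EDGES = [
    (0, 1), (0, 2), (0, 3), (1, 2), (1, 3), (2, 3),
    (4, 5), (4, 6), (4, 7), (5, 6), (5, 7), (6, 7),
    (3, 4),
]

groups = infer_groups(8, EDGES)
print(groups)  # nodes 0-3 share one label, nodes 4-7 share the other
```

The group labels carry no meaning beyond "more connected to each other than to the rest", which is the sense in which such categories would be inferred from segregation rather than assigned in advance. A real application would need actual network or residential data and a method that handles more than two groups.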