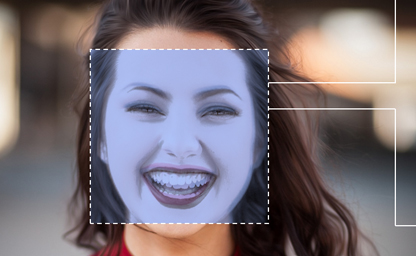

How We’ve Taught Algorithms to See Identity

The outputs of facial analysis systems depend on their training and evaluation data: the images used to “teach” a system what a subject looks like. In our study, we analyze how race and gender are represented in training databases from a critical discursive perspective. Specifically, we investigate how race and gender are codified in 92 image databases, many of which are publicly accessible and widely used in computer vision research.